When AI creates art

Machines can be creative, says Daniel Bisig, an AI expert who conducts research at an art college. But they still lack one key ability they would need to become real artists.

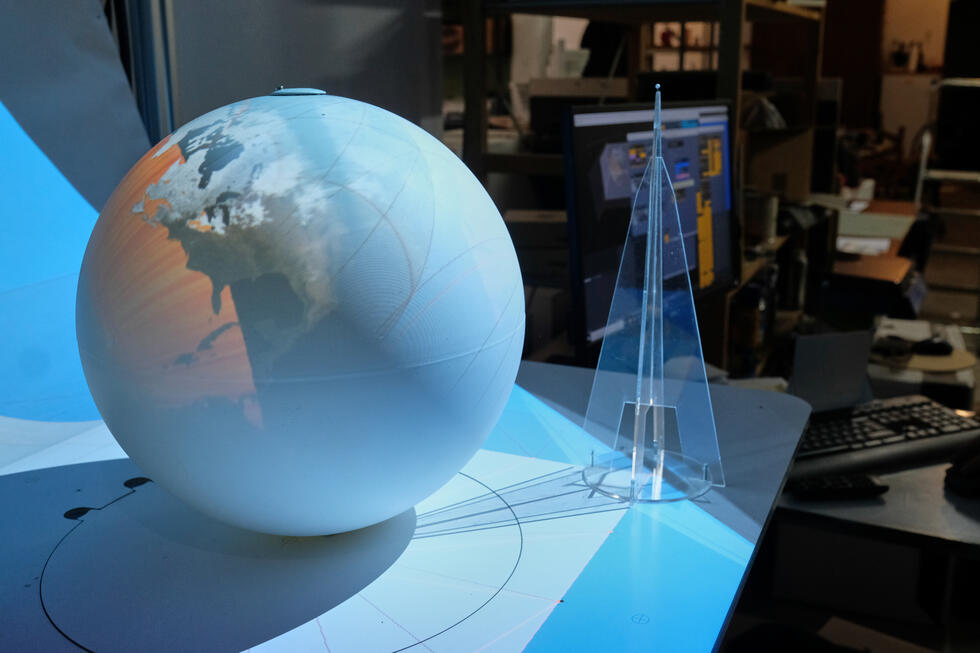

Everything is black: the walls, the cables, the audio mixers, the amplifiers, even Daniel Bisig’s T-shirt. In the lab, which resembles a sound studio, the 51-year-old is busy setting a robot in motion. The venue: the Institute for Computer Music and Sound Technology at the Zurich University of the Arts. The robot is part of Bisig’s research at the institute, which focuses on the interface between technology and musical practice. The scientist is investigating how artificial intelligence (AI) can be used in music and in other art forms. In short: how can intelligent machines create art?

Are they even capable of this?

Daniel Bisig: The more intelligent machines are, the more confidently we can say yes, they can create art. More advanced machines are more autonomous and can therefore create something original, which is a key characteristic of creativity. If the result of a machine’s creative work is also aesthetically appealing, a second important criterion is met. However, this holds only from our human perspective. From the machines’ point of view, no machine is creative, and hence none is capable of creating art.

From the machines’ point of view?

Yes, although this perspective is still severely underdeveloped, because no AI has yet reached the point where it can observe and assess itself. Consequently, AI also lacks the ability to judge its own creations: for the machine, it makes no difference whether the results of its work are good or not. Yet this self-reference is another fundamental characteristic of artistic creation.

You are currently working on a project that aims to teach robots precisely this ability …

Correct. I want to find out whether machines will ever be able to develop anything resembling aesthetic sensibility, and where its origins could lie. I’m interested, for example, in whether the various robots develop preferences for what they perceive as appealing, whether they pass these preferences on to the next generation, and whether groups form.

Courting robots

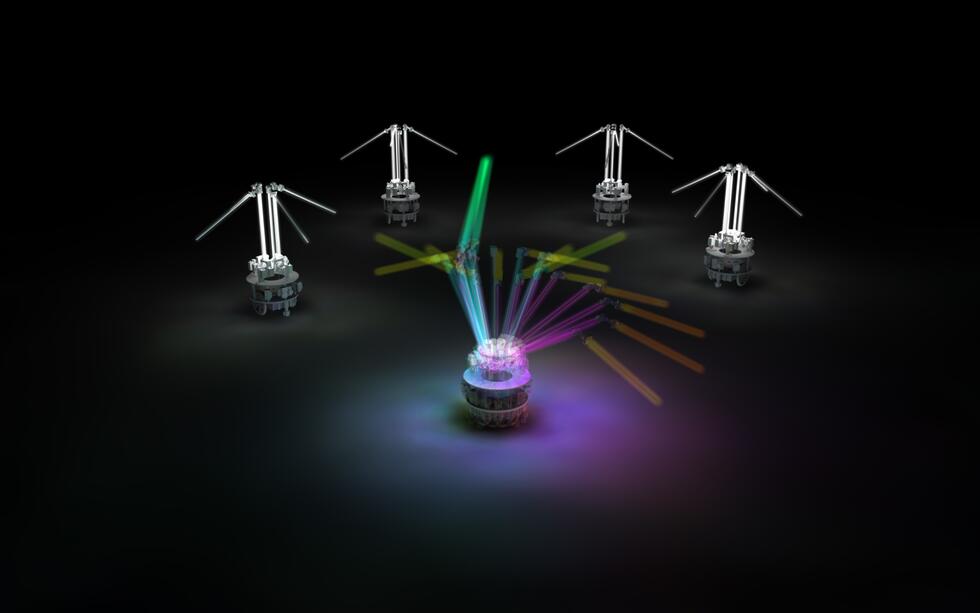

The robot has already learned to rotate around its own axis, but only slowly. Eventually it should be able to twirl, perform a mating dance, and strike poses to impress. “This research project is still very much a work in progress,” says Daniel Bisig, commanding the robot to stop its tentative rotations. His goal is to find out whether machines will ever be able to develop anything resembling aesthetic sensibility. To this end, he wants to build several robots that can court each other, for example through dance rituals or by changing color, much like a primordial biological mating ritual.

You are not just researching all this in theory.

Precisely. Our institute generally takes a very practice-oriented approach; we even build our own prototypes in our workshop. One of our motivations is to support performing artists with artificially intelligent tools, for example by automatically generating music for a dance production. AI is already widely used in the arts in this way. However, these are comparatively dumb machines.

Why dumb?

These machines only learn relationships through supervised learning, for example the connection between a given movement on stage and a specific sound that is to be generated at the same time. The AI is therefore only capable of imitation: the idea comes from humans, who also provide the data needed to implement it. Much more interesting is another learning principle, known as reinforcement learning. Here, the machine only knows whether a certain behavior is successful or not. It therefore reinforces successful behavior and abandons unsuccessful behavior.
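To make the reinforcement-learning principle concrete, here is a minimal sketch written as an epsilon-greedy "multi-armed bandit", one of the simplest forms of reinforcement learning. It is an illustration only, not Bisig’s method: the behaviors, their hidden success rates and the parameters `steps`, `epsilon` and `seed` are hypothetical. The learner never sees the success rates; it only observes whether each attempt succeeded, and gradually favors what works.

```python
import random

# Hypothetical behaviors a courting robot might try, with made-up probabilities
# that an observer responds to them. The learner never reads these numbers;
# it only observes success (1) or failure (0) after each attempt.
HIDDEN_SUCCESS_RATE = {"rotate": 0.1, "strike_pose": 0.3, "change_color": 0.9}


def try_behavior(behavior: str, rng: random.Random) -> int:
    """Simulated environment: returns 1 if the behavior succeeded, else 0."""
    return 1 if rng.random() < HIDDEN_SUCCESS_RATE[behavior] else 0


def learn_by_reinforcement(steps: int = 5000, epsilon: float = 0.1, seed: int = 42) -> dict:
    """Epsilon-greedy learner: estimates how successful each behavior is."""
    rng = random.Random(seed)
    behaviors = list(HIDDEN_SUCCESS_RATE)
    value = {b: 0.0 for b in behaviors}  # estimated success rate per behavior
    count = {b: 0 for b in behaviors}    # how often each behavior was tried

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random behavior.
            behavior = rng.choice(behaviors)
        else:
            # Exploit: repeat the behavior that has worked best so far.
            behavior = max(behaviors, key=value.get)
        reward = try_behavior(behavior, rng)
        count[behavior] += 1
        # Incremental average: successes raise a behavior's value,
        # failures lower it.
        value[behavior] += (reward - value[behavior]) / count[behavior]

    return value


estimates = learn_by_reinforcement()
best = max(estimates, key=estimates.get)
print(best, {b: round(v, 2) for b, v in estimates.items()})
```

The small `epsilon` keeps the learner exploring now and then, so it cannot get stuck on the first behavior that happened to succeed; nobody tells it which behavior is "right", which is exactly the difference from supervised learning.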

Intelligent dancing shoes

Daniel Bisig shows us a pair of dancing shoes. “Perfectly ordinary,” he says, “but with just a tiny bit of artificial intelligence.” To be precise, they are equipped with smart pressure sensors. These sensors detect certain movements of the dancers’ feet and associate them with the sounds they are intended to produce. The connections between dance movement and sound are predetermined, but the shoes first have to learn them. The approach: supervised learning. Bisig explains: “In this way, an acoustic component is added to the dance performance without requiring any additional effort.”
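The shoe example can be pictured as a tiny supervised classifier: humans supply labeled examples, and the system learns to reproduce that mapping. The sketch below is an assumption-laden illustration, not the actual ZHdK shoes: it assumes two normalized pressure readings per step (heel and toe), three hypothetical sounds, and a simple nearest-centroid rule. The article does not describe the real sensors, features or learning method.

```python
from math import dist
from statistics import mean

# Hypothetical labeled examples provided by humans:
# (heel pressure, toe pressure), normalized to 0..1, paired with the sound
# that movement is meant to trigger.
TRAINING_DATA = [
    ((0.9, 0.1), "drum"),   # heel strike
    ((0.8, 0.2), "drum"),
    ((0.1, 0.9), "chime"),  # tiptoe step
    ((0.2, 0.8), "chime"),
    ((0.7, 0.7), "bass"),   # flat-footed stomp
    ((0.8, 0.9), "bass"),
]


def train(examples):
    """Supervised learning: average the readings for each sound (one centroid per sound)."""
    grouped = {}
    for reading, sound in examples:
        grouped.setdefault(sound, []).append(reading)
    return {
        sound: tuple(mean(axis) for axis in zip(*readings))
        for sound, readings in grouped.items()
    }


def predict(model, reading):
    """Map a new sensor reading to the sound whose centroid is closest."""
    return min(model, key=lambda sound: dist(model[sound], reading))


model = train(TRAINING_DATA)
print(predict(model, (0.85, 0.15)))  # → drum
```

The system can only reproduce the movement-to-sound pairs it was shown; it cannot invent a new pairing, which is why Bisig calls such tools comparatively dumb.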

AI: The most important concepts

In theory, artificial intelligence (AI) can be strong or weak, but strong AI, which would equal or even surpass human intelligence, does not yet exist. Weak AI means specialized artificial “expertise”. Machines can acquire this expertise through machine learning (learning from experience), which includes supervised learning (for example, imitation), unsupervised learning (recognizing previously undiscovered patterns in data without labeled examples), and reinforcement learning (learning from success and failure). The last of these allows machines to make decisions much more independently. Another branch of machine learning is currently the subject of considerable debate: deep learning. This method, which uses artificial neural networks, is often described as coming closest to how the human brain learns.

And why do you find reinforcement learning more exciting?

Because this approach leaves the AI to its own devices and thus enables it to become more autonomous. Such machines have the potential to be creative. But this also has its drawbacks.

Which are?

Our ability to control such AI is very limited. It is very self-referential, barely interactive, and consequently potentially antisocial. To the public, such machines are likely to be of little interest. The future belongs to clever machines that are also social.


So far we have talked about two areas of application of AI in the arts: as a tool or in the role of the actual artist. Are there other possibilities?

Yes, there are. Intelligent machines can also be a very valuable tool to help artists reflect on their work: when teaching a robot to perform tasks, for example, artists have to think very carefully about their own approach and their decision-making processes. In this way, machines can also be relevant to entirely conventional art forms. Brainstorming sessions with machines are another potential application. A third is artists who create their work in collaboration with AI, such as the late computer art pioneer Harold Cohen with his painting program Aaron.

Such constellations do not exactly make it any easier to determine who the author of a work of art actually is …

That is true, as long as the public demands the name of a single artist. However, the fields my research focuses on, such as visual arts, dance, and music, usually involve collectives anyway, and even without AI, these often break apart over copyright issues. But a new question will arise: what if the AI is not given credit and starts fighting for recognition?

A realistic scenario?

Let’s put it like this: we will certainly have to discuss when an AI should be given the rights of a natural person. And soon.

Incredibly bright: the sunlight on the roof terrace of the Zurich University of the Arts, where this interview comes to a close. Equally bright is the mind of Daniel Bisig, who earned a doctorate in molecular biology, worked as a web designer for a while, and spent many years doing research at the Artificial Intelligence Laboratory of the University of Zurich. “Chaotic” is Bisig’s casual understatement for the path that ultimately led him to the arts 15 years ago. Daniel Bisig: “I have always been fascinated by how computers can be used as tools to create alternative realities.” Incidentally, he acquired the technical skills needed to build his prototypes through learning by doing. Natural intelligence.

Antisocial geniuses

A walk-in installation? Yes and no. An immersive lab is a platform that makes artificial intelligence (AI) tangible. Take “Zeta”, for example: this AI improvises its own music to accompany a live concert held in the marquee-like center of the installation. Another AI holds up a mirror to you: if you touch the installation, either your own reflection appears with a delay, or you see an immediate reaction, but on the face of a stranger. The AI decides which of these happens; the human being loses control. Daniel Bisig: “The more intelligent machines are, the less we are able to control them. These projects by two different artists illustrate this perfectly.”

Written by:

Photos: Ralph Hofbauer & ZHDK (Sensor shoes and video dancer)