What are foundation models and why are they so useful in NLP?

In recent years, the fields of Artificial Intelligence (AI) and Natural Language Processing (NLP) have been taken by storm by so-called “foundation models”. Even if you are not following AI news, you might have seen pictures generated by the recently released DALL-E 2, Jasper, Stable Diffusion, or the latest Lensa AI app, which is flooding social media platforms with AI-generated “avatars”. Maybe you have read claims that ChatGPT can automatically produce text at a near-human level. But foundation models are useful for much more than entertaining one’s followers on social media: many companies use them to automate their data-related tasks.

Language models such as ELMo or BERT are making AI and data-driven solutions accessible to many more companies than was possible just a few years ago. Domain-specific models built on top of these foundations further facilitate the development of effective solutions for fields that require complex, specialized terminology (such as BioBERT, a biomedical BERT; SciBERT, a BERT for scientific text from the biomedical and computer science domains; clinicalBERT; Legal-BERT; and others). What’s more, models of the BERT family are also available in a variety of languages, allowing the development of AI solutions that aren’t limited to English.

This all sounds very promising but, you may ask, which tasks can actually be solved by using NLP?

Although NLP is becoming well known and even trendy, its use in business is still fairly new, and people often don’t know its true capabilities. Many companies don’t feel ready to use NLP simply because they are not aware of how exactly they could benefit from it.

Here’s a handful of real use cases:

- Do you collect customer feedback as free text (notes in a CRM or online product reviews) but struggle to get value out of it? NLP applications such as Sentiment Analysis can analyze that feedback automatically and help you understand what your clients perceive as good or bad.

- Does your customer-support team get tons of similar requests from clients? Instead of sorting them manually, you can save your team’s time by using a text classification model to route each request to the relevant department automatically. Still too many emails? The category predicted by that same model can also trigger a customized automatic reply, based on the content of the received email, further reducing the load on the support team.

- Do you have an online platform with a vast catalog of items? An NLP-powered recommendation engine could suggest items similar or relevant to what your users already like or purchase.

- Are you an insurance company? Then you could use Entity Extraction in claim processing to extract relevant information and fill out claim forms automatically. So much time could be saved!

- Are you a healthcare provider? Entity Extraction can help you too, by turning unstructured health records into structured data, which can be used to find patterns and predict the development of diseases in each individual case.

You might be wondering: are NLP algorithms reliable enough to be trusted with such tasks? We wouldn’t be in this business if we didn’t firmly believe they are. However, don’t expect AI models of a certain complexity to ever reach 100% accuracy in their predictions (not even humans do). The major benefit of AI isn’t avoiding all errors, but letting it handle common, repetitive scenarios automatically (in the case of NLP models, automating text-based tasks). This reduces the workload of human experts, who can then spend their time and knowledge on the complex cases instead.

The good news is that since the introduction of foundation models, accuracy on NLP tasks has leapt forward, reaching near-human performance in many key applications.

So, what exactly are foundation models in AI?

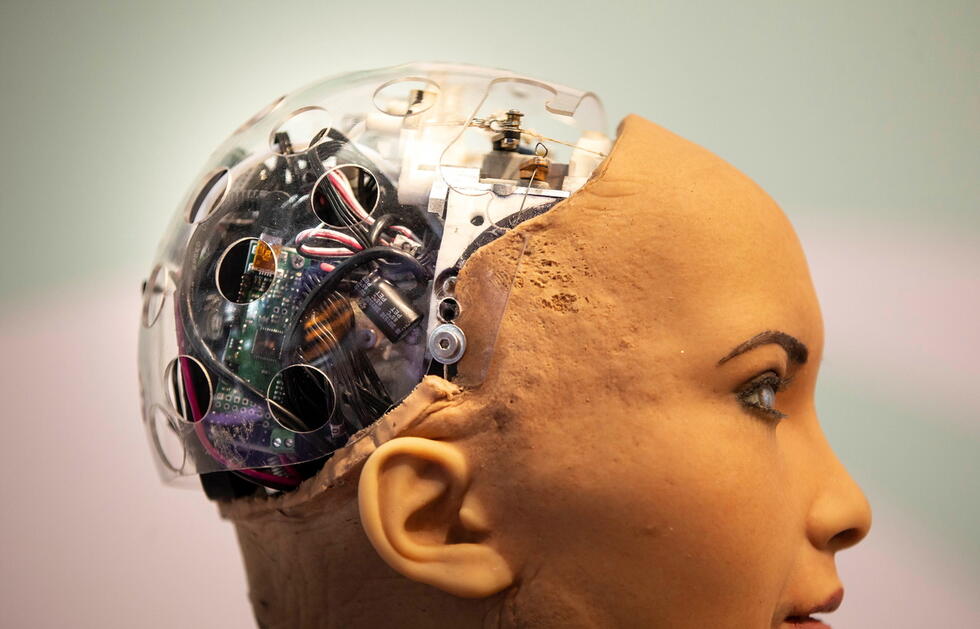

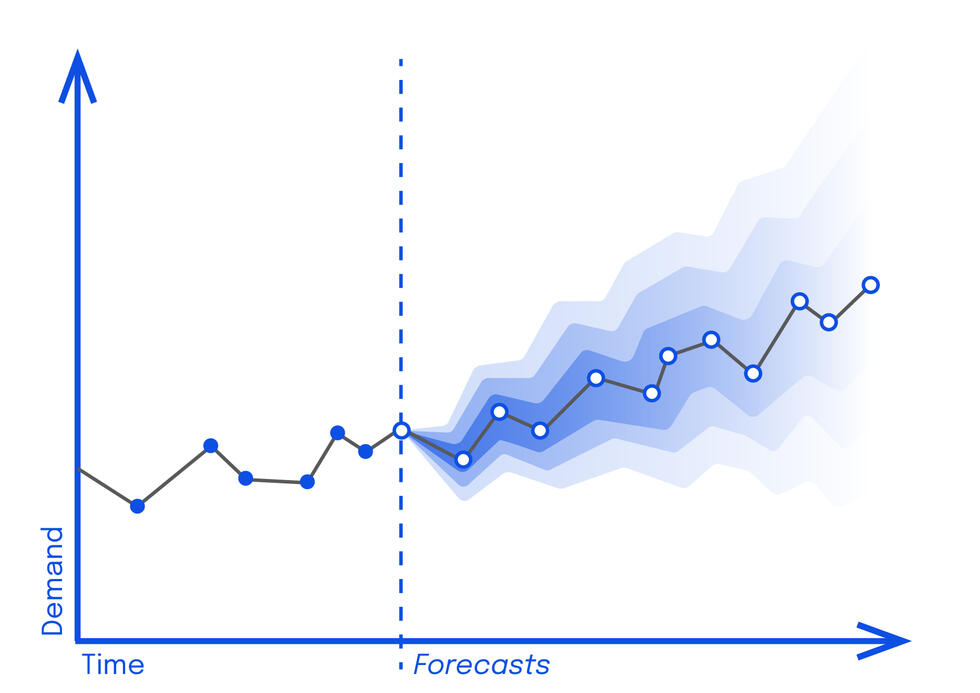

An Artificial Intelligence model is a program trained to emulate logical decision-making based on available data, with the goal of performing specific tasks. A task can be anything from predicting the weather based on historical data to categorizing news articles into classes such as sports, politics, music, etc.

The model can be trained in a few ways, mainly through:

- Supervised learning, where the data is labeled and the model recognizes patterns in the text that correspond to each unique label (e.g., in news articles, words such as “football” or “tennis” are likely to point to a “Sports” label);

- Unsupervised learning, where on the contrary no labels are available in the dataset and the model must learn how to cluster information into groups based on the similarity between items (it recognizes patterns in the text to group data together);

- Self-supervised learning, which also doesn’t require manually labeled data, but uses the data itself to create the labels (which is particularly useful because labeled datasets are scarce and extremely valuable). Stay tuned for our article next month, all about a new and powerful use of this training method in Computer Vision applications.
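To make supervised learning concrete, here is a minimal sketch in Python of a classic text classifier that predates foundation models, multinomial Naive Bayes. The toy headlines and the function names `train_naive_bayes` and `classify` are hypothetical illustrations, not part of any library. The labels are supplied by a human, and the model simply learns which words tend to appear with each label.

```python
import math
from collections import Counter, defaultdict

def train_naive_bayes(examples):
    """Count words per label from human-labeled (text, label) pairs."""
    word_counts = defaultdict(Counter)  # label -> Counter of words
    label_counts = Counter()            # label -> number of documents
    vocab = set()
    for text, label in examples:
        words = text.lower().split()
        word_counts[label].update(words)
        label_counts[label] += 1
        vocab.update(words)
    return word_counts, label_counts, vocab

def classify(model, text):
    """Return the label with the highest log-probability for `text`."""
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, doc_count in label_counts.items():
        score = math.log(doc_count / total_docs)  # log prior of the label
        n_words = sum(word_counts[label].values())
        for w in text.lower().split():
            if w not in vocab:
                continue  # ignore words never seen during training
            # add-one (Laplace) smoothing so an unseen word/label pair is not zero
            score += math.log((word_counts[label][w] + 1) / (n_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical toy training data: the labels come from a human annotator.
TRAINING_DATA = [
    ("football match ends in a draw", "sports"),
    ("tennis star wins the final", "sports"),
    ("parliament passes new budget", "politics"),
    ("minister resigns after vote", "politics"),
]

model = train_naive_bayes(TRAINING_DATA)
print(classify(model, "the tennis final"))  # → sports
```

Every bit of knowledge this model has comes from the labeled examples, which is exactly why labeled data is the bottleneck that self-supervised learning helps remove.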

Why is that so special?

A common self-supervised task for language models is masked-word prediction: some words in a sentence are hidden, and the model must guess them from the surrounding context. By predicting masked words across a large collection of texts, a model learns a lot about how language works, both at the level of structure (grammar) and of meaning (semantics). In our example above, the model would learn that the masked word is a noun; that it is the name of a language, because it follows the verb “speak”; and that it should be the language matching the broader meaning of the sentence, e.g., the speaker’s country of origin. Thanks to this, it would learn to predict the word “French”.
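The key point is that the training labels come for free from the raw text. The Python sketch below shows, under simplifying assumptions, how one masked-word training example could be built: it splits on whitespace (real models use subword tokenizers) and always substitutes `[MASK]`, whereas BERT, for instance, selects about 15% of tokens and replaces only most of them with the mask token. The function name `make_mlm_example` and the sample sentence are hypothetical.

```python
import random

MASK = "[MASK]"

def make_mlm_example(text, mask_prob=0.15, seed=None):
    """Build one masked-word training example from raw, unlabeled text.

    Returns (inputs, labels): inputs has some tokens replaced by [MASK];
    labels[i] is the hidden original token at masked positions, None elsewhere.
    """
    rng = random.Random(seed)
    tokens = text.split()  # naive whitespace tokenizer (a simplification)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)
            labels.append(tok)   # the "label" is simply the word we hid
        else:
            inputs.append(tok)
            labels.append(None)  # no prediction target at this position
    # Make sure every non-empty example has at least one word to predict.
    if tokens and all(label is None for label in labels):
        i = rng.randrange(len(tokens))
        inputs[i], labels[i] = MASK, tokens[i]
    return inputs, labels

inputs, labels = make_mlm_example("I grew up in Paris so I speak French", seed=1)
print(inputs)
print(labels)
```

No human annotation is involved: training a model to fill in the `[MASK]` positions with the stored labels is what teaches it grammar and meaning.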

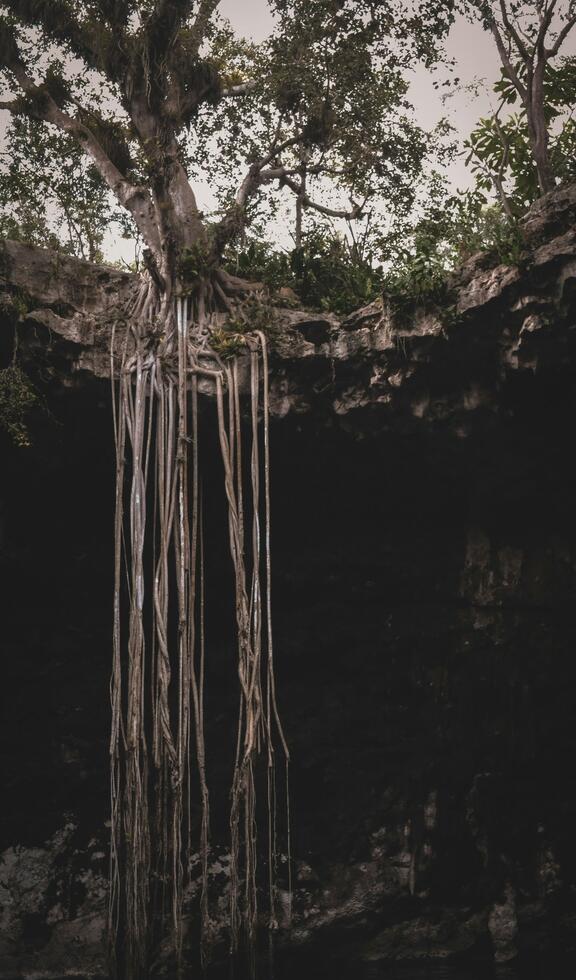

When talking about language applications, a model is trained on a lot of raw texts, usually from various sources on the Internet. “Raw” means that the texts were not manually labeled by humans — so thanks to this Self-supervised learning method, we do not need to spend resources on a long and expensive annotation process.

Thanks to the Internet we can obtain a large amount of text fairly easily to train a model. If we want to train it in a specific domain (e.g., legal, clinical, or scientific), we can often find texts for that domain on the web as well. It sounds trivial, but a decade or two ago this wasn’t the case, and the training of such large models was impossible.

What takes this to the next level is that the language knowledge captured by the model becomes the foundation for solving more specific tasks, such as text classification or information extraction. The model only needs to be fine-tuned with Supervised Learning on a relatively small set of labeled data, making much more efficient use of scarce annotations.
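Full fine-tuning usually also updates the foundation model’s own weights. The Python sketch below shows only the simplest variant, often called a linear probe: the pretrained representation stays frozen and a small logistic-regression head is trained on a handful of labeled examples. The 2-dimensional vectors are hypothetical stand-ins for what a pretrained encoder would output, and `train_head` and `predict_proba` are illustrative names, not a library API.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(features, labels, lr=0.5, epochs=200):
    """Fit a logistic-regression head on frozen feature vectors (labels 0/1)."""
    w, b = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # derivative of the log-loss with respect to the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b

def predict_proba(head, x):
    """Probability of the positive class for one feature vector."""
    w, b = head
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

# Hypothetical stand-ins for sentence vectors from a frozen pretrained encoder
# (real ones have hundreds of dimensions); 1 = positive review, 0 = negative.
features = [[1.0, 0.1], [0.9, 0.2], [0.1, 1.0], [0.2, 0.8]]
labels = [1, 1, 0, 0]

head = train_head(features, labels)
print(round(predict_proba(head, [0.95, 0.15]), 3))
```

Because the pretrained vectors already separate the classes reasonably well, only a few labeled examples and a tiny number of trainable parameters are needed, which is the practical payoff of building on a foundation model.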

Why did foundation models prove to be such a breakthrough in NLP and AI?

Artificial Intelligence covers several domains, and some of them have benefited more than others from the introduction of foundation models. Three prominent examples are: Natural Language Processing (as we’ve seen so far, it deals with all text-based tasks); Automatic Speech Recognition (for transcribing or translating speech data); and Computer Vision (which covers anything involving visual media, such as detecting objects in images and videos).

NLP existed well before foundation models came around. However, it was far less common: few companies would attempt to use NLP to solve their text-related tasks. Before foundation models that encode a lot of language knowledge existed, developing NLP solutions required human developers to code that language knowledge by hand, in the form of lexical resources, rule-based systems, and manually crafted features fed into machine learning models. Such approaches required a team of experts combining technical and domain knowledge (and often language knowledge), took a lot of time and effort to develop, and were extremely hard to scale or reuse in another domain or for another task. As a result, few companies had the resources and experts required to develop them.

NLP solutions based on foundation models now outperform even carefully crafted algorithms on many tasks. This does not mean that we no longer need experts with technical, language, and domain knowledge; we still do. But nowadays, foundation models can handle a wide range of cases, while experts can focus on developing solutions for the most complex applications.

Who can develop AI solutions using foundation models?

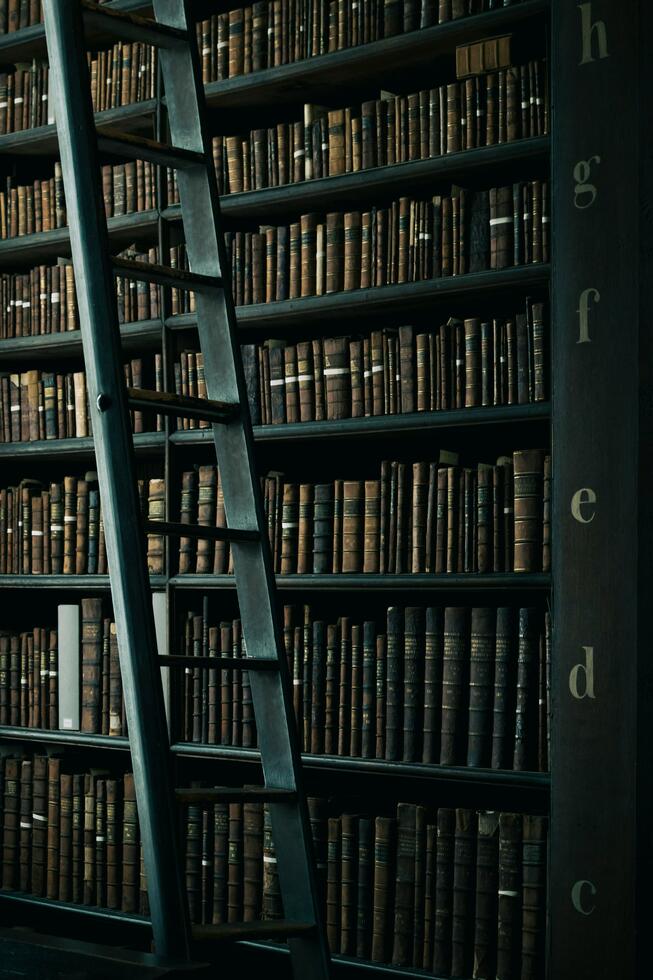

Foundation models are a trendy topic in the data science domain at the moment. This means that the web is full of tutorials and crash courses that claim to teach you “how to train a model for <NLP task> in just a few lines of code”.

Thanks to the fast development of NLP, nowadays there are several software libraries that allow for easy access to foundation models and the development of customized models by fine-tuning them on specific tasks and data with less code. However, this doesn’t mean that minimal coding knowledge is enough to develop AI models.

First of all, running those “few lines of code” will produce a baseline model that may perform decently but will rarely solve a real business case on its own; you will need to go beyond what one can learn in a crash course. Experienced data scientists are able to identify the best model architecture (e.g., select the suitable shape of the output layer for binary, multi-class, or multi-label settings) and choose from a wide set of available models (ELMo, BERT, DistilBERT, RoBERTa, domain-specific versions, etc.) while keeping in mind the computational requirements and the speed-accuracy trade-off to achieve the best performance.
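The difference between the three output settings is easiest to see in how a model’s raw output scores (logits) are turned into predictions. The Python sketch below uses the standard conventions: a single sigmoid unit for binary, a softmax over all classes for multi-class (exactly one label), and an independent sigmoid per label for multi-label (any number of labels). The function name `decode`, the 0.5 threshold, and the example labels are assumptions for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def decode(logits, setting, labels, threshold=0.5):
    """Turn raw output-layer scores into predicted label(s)."""
    if setting == "binary":
        # One output unit: probability of the positive class labels[1].
        return labels[1] if sigmoid(logits[0]) >= threshold else labels[0]
    if setting == "multi-class":
        # One unit per class; softmax makes classes compete, so exactly one wins.
        probs = softmax(logits)
        return labels[probs.index(max(probs))]
    if setting == "multi-label":
        # One independent sigmoid per label: zero, one, or several can fire.
        return [lab for lab, z in zip(labels, logits) if sigmoid(z) >= threshold]
    raise ValueError(f"unknown setting: {setting}")

topics = ["sports", "politics", "music"]
print(decode([2.0], "binary", ["negative", "positive"]))  # → positive
print(decode([0.1, 2.0, -1.0], "multi-class", topics))    # → politics
print(decode([2.0, -3.0, 0.5], "multi-label", topics))    # → ['sports', 'music']
```

Choosing the wrong setting, for example a softmax when an article can legitimately be about both sports and music, silently forces the model to drop valid labels, which is one reason this decision needs experience.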

Secondly, you will need to adjust the model to fit your objectives accurately, and that requires quality data. Even foundation models, as powerful as they are, will not show reasonable results if the data used for fine-tuning is messy. Crash courses and tutorials usually show how to develop a model on a nice and clean dataset, but real-world data is not like that: it always has noise, missing values, imbalanced classes… It might not even be obvious which data you can use to solve your task. A specialist with hands-on real-world experience can identify the data that is available and suitable, clean it, and prepare it to be fed into the model.
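As a small taste of what preparing real-world data involves, the Python sketch below handles three of the issues mentioned: missing values, noisy whitespace with duplicates, and class imbalance. It drops incomplete rows, normalizes whitespace, removes case-insensitive duplicates, and computes inverse-frequency class weights using the same n_samples / (n_classes × count) formula as scikit-learn’s `class_weight="balanced"`. The function names and sample records are hypothetical; real pipelines usually need far more domain-specific cleaning.

```python
from collections import Counter

def clean_dataset(records):
    """Drop rows with missing values or empty text, normalize whitespace,
    and remove duplicates (compared case-insensitively)."""
    seen, cleaned = set(), []
    for text, label in records:
        if text is None or label is None:
            continue  # missing value
        text = " ".join(text.split())  # collapse stray spaces and newlines
        if not text:
            continue  # empty after cleaning
        key = (text.lower(), label)
        if key in seen:
            continue  # duplicate of an earlier row
        seen.add(key)
        cleaned.append((text, label))
    return cleaned

def class_weights(records):
    """Inverse-frequency weights so rare classes count more in the loss."""
    counts = Counter(label for _, label in records)
    n, k = len(records), len(counts)
    return {label: n / (k * c) for label, c in counts.items()}

raw = [
    ("  Great   product ", "pos"),
    ("great product", "pos"),
    (None, "neg"),
    ("", "neg"),
    ("Broken on arrival", "neg"),
    ("Love it", "pos"),
    ("ok", None),
]
data = clean_dataset(raw)
print(data)
print(class_weights(data))
```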

Lastly, preparing the data and writing a training script is not all it takes to develop an AI solution. You will need to identify and use appropriate computational resources during development and after deployment, choose the right infrastructure for deployment, host models and data, etc. These tasks require skills that simply can’t be learned in a data science crash course.

Sometimes, the most valuable thing to know about foundation models is when not to use them. They are large, need a lot of memory, take a long time to train, and have a significant environmental footprint. In some cases, NLP tasks are easier to solve without foundation models, or even without complex NLP at all.
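For example, if the information you need follows a fixed, predictable format, a couple of regular expressions may be all it takes. The Python sketch below assumes a hypothetical claim-number format such as `CLM-2023-004512` and ISO-style dates; with consistent formats like these, no model needs to be trained or hosted at all.

```python
import re

# Hypothetical format: claim numbers look like "CLM-2023-004512".
CLAIM_ID = re.compile(r"\bCLM-\d{4}-\d{6}\b")
# ISO-style dates such as "2023-03-14".
ISO_DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")

def extract_fields(text):
    """Pull structured fields out of free text with plain pattern matching."""
    return {
        "claim_ids": CLAIM_ID.findall(text),
        "dates": ISO_DATE.findall(text),
    }

email = "Re: CLM-2023-004512, incident on 2023-03-14. See also CLM-2022-000001."
print(extract_fields(email))
```

Once formats vary or the needed information is phrased in free language (for instance, a description of how an accident happened), rules like these break down quickly, and that is where Entity Extraction with foundation models earns its cost.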

We have defined AI models in general and foundation models in particular; we discussed how they are trained and how they can perform NLP tasks; lastly, we explained why developing and using these models isn’t for everyone, even though complex problem-solving has become much more accessible.

Foundation models undoubtedly opened a new era in NLP, beating previous methods on a wide range of tasks. We would know — we have specialists in our team that worked on NLP development before these models, and others who have mastered their use since they were students. We helped many clients solve problems and boost efficiency by developing NLP applications with foundation models. For instance, we developed a model that extracts information from a specialized legal database of documents and helps the user write company purposes by presenting pre-filtered texts that are ready to use. Other examples are custom chatbots, virtual assistants, and automated email processes thanks to text classification. However, thanks to our experience and the many applications we have encountered, we also know when to advise our clients about solutions that might not include those models.

If you wanted to better understand foundation models, we hope this article has helped, and we invite you to follow our page to be notified when we publish a new article. Next month, we will dive into Self-Supervised Training for Computer Vision applications.

Artificialy

Artificialy is a leading-edge center of competence that draws from the best scientific knowledge thanks to its links to renowned AI scientific institutions and attracts and retains the best talents in Data Science. Leading-edge know-how is paired with the founders' 25 years of experience in running projects with major firms and delivering measurable results to companies.