Is your machine Self Learning?

In recent years, few technologies have evolved as quickly as Artificial Intelligence, and Neural Networks in particular. This exponential growth has been driven mainly by two factors:

New, increasingly high-performance models and algorithms have been developed that allow Neural Networks to learn a wide range of tasks faster and more efficiently.

Ever-increasing computational power has allowed these Neural Networks to grow larger, absorbing, in turn, an increasing amount of information.

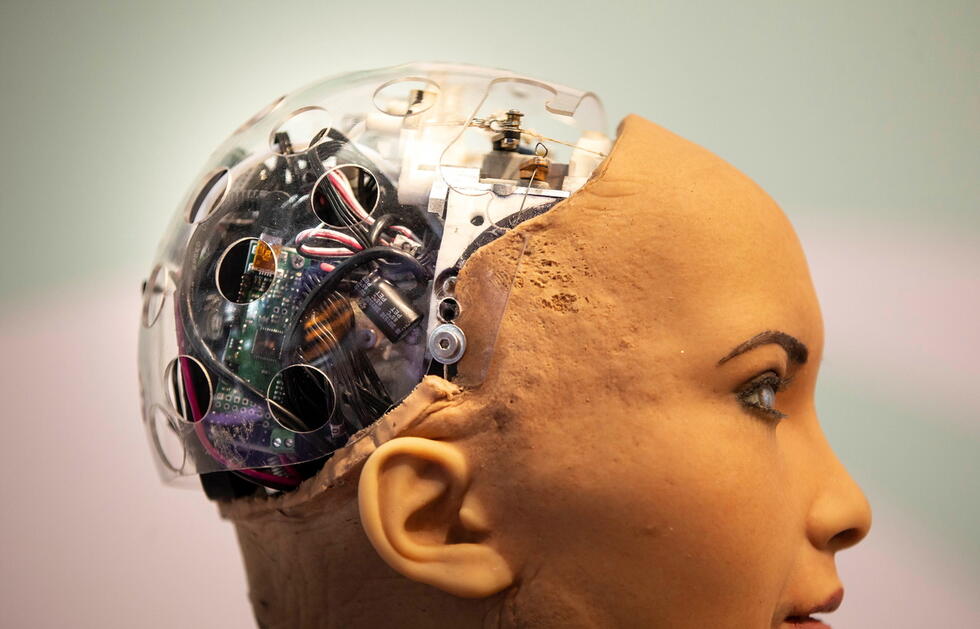

Although the computational capabilities achieved today are approaching those of a human being, artificial Neural Networks are still a long way from performing tasks that are trivial for humans and even for most animals. It is becoming evident, then, that growing networks in size alone may not be enough for AI to understand or predict certain situations as humans do and so overcome its current limitations. For example, an attentive driver who notices a pedestrian knows that the pedestrian continues to exist and move even when hidden by an obstacle, and can reasonably predict that the pedestrian might cross the road. A neural network that does not understand that the “pedestrian” object still exists after disappearing behind the obstacle cannot predict that it might soon reappear and pose a danger. As a result, autonomous cars still cannot be fully reliable or guarantee that they will recognize and avoid danger in every possible situation.

There are heated debates among leading figures in AI research about the correct approach to overcoming these limitations.

One approach that seems among the most promising is Self-Supervised Learning, championed by Yann LeCun, Turing Award winner and Chief AI Scientist at Meta. Its main feature is that the network's learning does not depend on a human providing thousands of already “solved” (labeled) examples, as in the more widely used “Supervised Learning” (you can read more about this and other methods in our article about Foundation Models). Instead, the network learns autonomously: it derives the correct answers from the data itself, for example by hiding part of an input and trying to predict it, and corrects itself based on its own errors.
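To make the idea of the data “supplying its own answers” concrete, here is a minimal sketch in Python, assuming a toy one-dimensional signal instead of images. The functions `make_masked_examples`, `predict_from_neighbors` and `self_supervised_error` are hypothetical names for illustration: one value is hidden at a time, the hidden value becomes the target, and a stand-in “model” is scored on how well it guesses it.

```python
from typing import List, Optional, Tuple

# One training example: the sequence with one value hidden, the hidden position, and the hidden value.
Example = Tuple[List[Optional[float]], int, float]


def make_masked_examples(signal: List[float]) -> List[Example]:
    """Turn one unlabeled sequence into training examples whose targets come from the data itself."""
    examples: List[Example] = []
    for i in range(1, len(signal) - 1):  # skip the ends so every hidden value has two neighbors
        masked: List[Optional[float]] = list(signal)
        target = signal[i]
        masked[i] = None  # hide the value: this is the "question" the model must answer
        examples.append((masked, i, target))
    return examples


def predict_from_neighbors(masked: List[Optional[float]], i: int) -> float:
    """Toy stand-in for a network: guess the hidden value as the mean of its two neighbors."""
    left, right = masked[i - 1], masked[i + 1]
    assert left is not None and right is not None
    return (left + right) / 2


def self_supervised_error(signal: List[float]) -> float:
    """Mean squared error of the guesses; a real model would update its weights to reduce it."""
    examples = make_masked_examples(signal)
    if not examples:
        raise ValueError("need at least 3 values to build a masked example")
    errors = [(predict_from_neighbors(m, i) - t) ** 2 for m, i, t in examples]
    return sum(errors) / len(errors)


if __name__ == "__main__":
    print(self_supervised_error([0.0, 1.0, 2.0, 3.0, 4.0]))  # prints 0.0 (smooth signal, exact guesses)
    print(self_supervised_error([0.0, 2.0, 0.0]))  # prints 4.0 (a spike the neighbors cannot explain)
```

A real network would have trainable parameters and would adjust them, for example by gradient descent, to reduce this error; the point of the sketch is that no human-written label appears anywhere.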

Self-Supervised Learning also allows the network to learn, on its own, additional information that helps it identify objects and predict their future behavior.

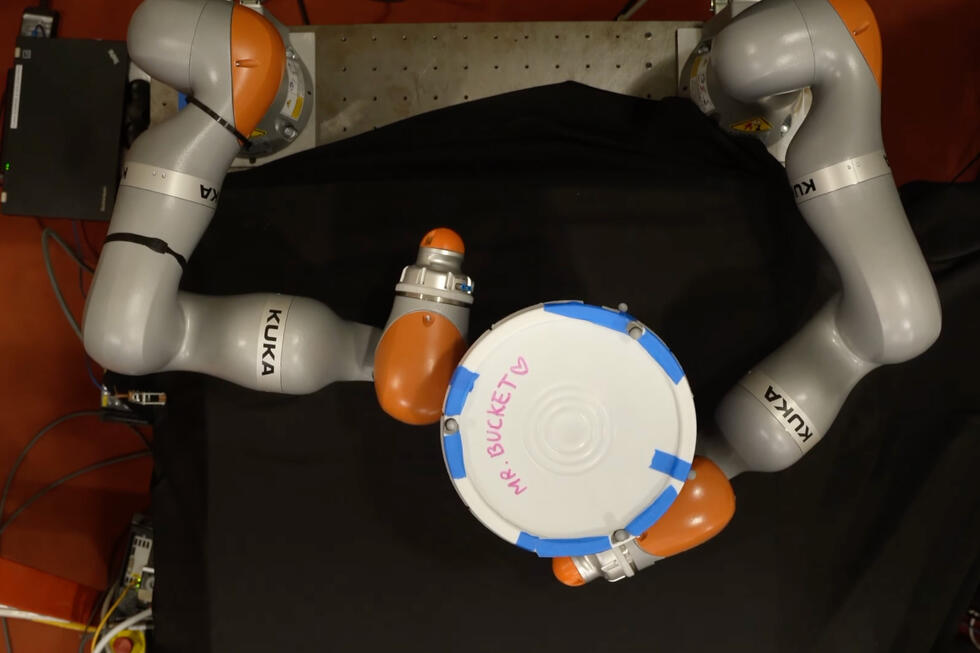

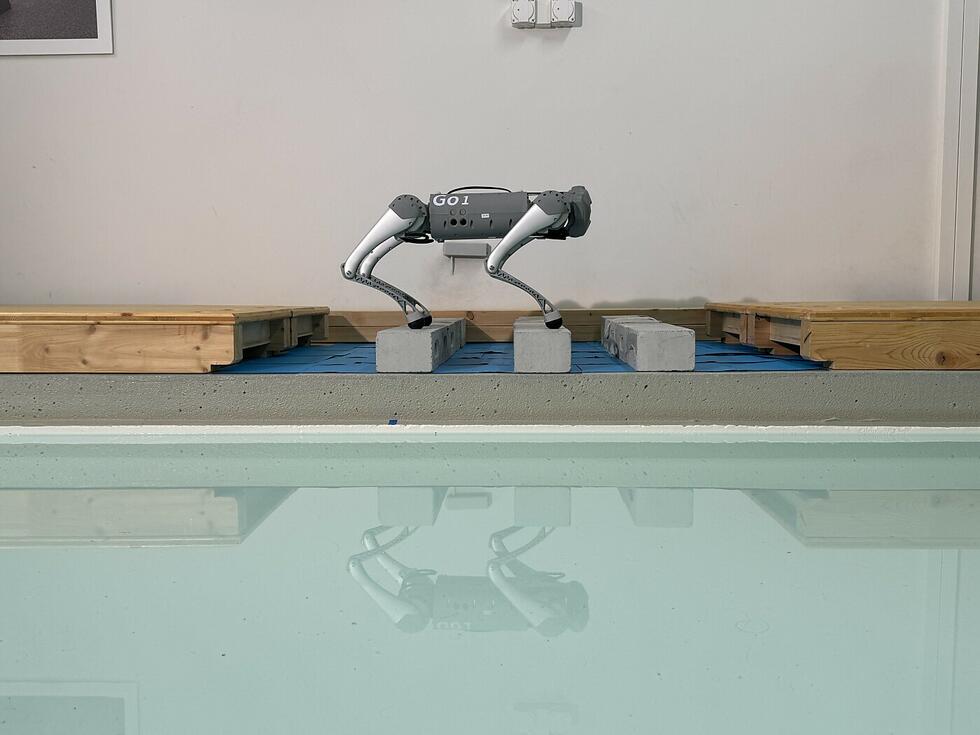

This learning mechanism is also closer to the way we learn: the network learns by watching scenes that “pass before its eyes”, comparing images of the same scene at different times, comparing similar images, and sometimes even performing actions (think of robots or simulators) and then observing how those actions have modified the scene.
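One common way to turn “comparing images of the same scene” into a training signal is a contrastive loss such as InfoNCE, which rewards a network when the embeddings of two views of the same scene are more similar to each other than to the embeddings of other scenes. The sketch below is a simplified, one-directional version in plain Python. It assumes the embeddings have already been produced by some network, and it illustrates the general technique rather than the exact method of any algorithm mentioned in this article.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms


def info_nce(view_a: List[List[float]], view_b: List[List[float]], temperature: float = 0.1) -> float:
    """Average InfoNCE loss, where view_a[i] and view_b[i] embed two views of the same scene.

    Every other entry of view_b acts as a "negative": the loss is low only when each
    anchor is much more similar to its own partner than to the other scenes.
    """
    total = 0.0
    for i, anchor in enumerate(view_a):
        logits = [cosine(anchor, other) / temperature for other in view_b]
        peak = max(logits)  # subtract the maximum before exponentiating, for numerical stability
        log_partition = peak + math.log(sum(math.exp(z - peak) for z in logits))
        total += log_partition - logits[i]  # equals -log(softmax probability of the true partner)
    return total / len(view_a)


if __name__ == "__main__":
    scene_views = [[1.0, 0.0], [0.0, 1.0]]
    print(info_nce(scene_views, scene_views))  # matching views: loss close to 0
    print(info_nce(scene_views, [[0.0, 1.0], [1.0, 0.0]]))  # partners swapped: loss close to 10
```

The temperature controls how sharply the loss focuses on the hardest negatives; 0.1 here is only an illustrative value.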

Some of these methods go so far as to try to teach Neural Networks the concept of objects and entities (e.g., the “pedestrian”), so that they can build a model from what they have learned and apply it even in situations they have not yet seen. The best known of these algorithms are 3DETR, DINO, and VICRegL; the latter was released only a few weeks ago. DINO, in particular, is already remarkably good at recognizing the main object in a scene and separating it from its context or background, without any human supervising the training. With other, better-established AI methods, such results would have been achievable only in very limited contexts.
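DINO learns by self-distillation: a “student” network is trained to match the output distribution of a “teacher” network on a different view of the same image, while the teacher's weights are an exponential moving average of the student's. Teacher outputs are also centered and sharpened (with a low temperature) to keep the two networks from collapsing to a trivial constant answer. The following is a simplified sketch of those three ingredients on plain lists of numbers; the temperatures and momenta are illustrative values, and the real method also uses multi-crop augmentation and runs on full neural networks.

```python
import math
from typing import List


def log_softmax(logits: List[float], temperature: float) -> List[float]:
    """Log-probabilities of softmax(logits / temperature), computed stably."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    log_total = peak + math.log(sum(math.exp(z - peak) for z in scaled))
    return [z - log_total for z in scaled]


def dino_loss(student_out: List[float], teacher_out: List[float], center: List[float],
              student_temp: float = 0.1, teacher_temp: float = 0.04) -> float:
    """Cross-entropy H(teacher, student) for one pair of views.

    The teacher output is centered (center subtracted) and sharpened (low temperature);
    in training, only the student receives gradients from this loss.
    """
    teacher_probs = [math.exp(lp) for lp in log_softmax(
        [t - c for t, c in zip(teacher_out, center)], teacher_temp)]
    student_log_probs = log_softmax(student_out, student_temp)
    return -sum(p * lq for p, lq in zip(teacher_probs, student_log_probs))


def ema_update(teacher: List[float], student: List[float], momentum: float = 0.996) -> List[float]:
    """The teacher's weights slowly follow the student's (exponential moving average)."""
    return [momentum * t + (1 - momentum) * s for t, s in zip(teacher, student)]


def update_center(center: List[float], teacher_batch: List[List[float]], momentum: float = 0.9) -> List[float]:
    """Running mean of teacher outputs over batches, used for centering."""
    batch_mean = [sum(column) / len(teacher_batch) for column in zip(*teacher_batch)]
    return [momentum * c + (1 - momentum) * b for c, b in zip(center, batch_mean)]


if __name__ == "__main__":
    no_center = [0.0, 0.0, 0.0]
    print(dino_loss([5.0, 0.0, 0.0], [5.0, 0.0, 0.0], no_center))  # student agrees: loss near 0
    print(dino_loss([0.0, 5.0, 0.0], [5.0, 0.0, 0.0], no_center))  # student disagrees: loss near 50
```

No labels appear anywhere: the only target the student ever sees is the teacher's own (slowly updated) prediction.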

As these new algorithms become better at identifying objects and predicting their behavior, they have already achieved an important first success: a network can be trained much more efficiently, with far fewer labeled examples than a “Supervised” network would require. In real-world applications it is increasingly difficult to find data that can be used commercially, so simply achieving the desired results with a much smaller dataset is already a significant advantage.
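The data-efficiency argument can be illustrated with a minimal sketch: once a self-supervised network has produced good embeddings, even a very simple classifier fitted on a handful of labeled examples can work well. Here the embeddings are assumed to be precomputed (the numbers are made up for illustration), and a nearest-centroid classifier, used instead of the more common linear classifier for brevity, stands in for the small “head” trained on top of frozen features. `fit_centroids` and `predict` are hypothetical helpers.

```python
import math
from typing import Dict, List


def fit_centroids(features: List[List[float]], labels: List[str]) -> Dict[str, List[float]]:
    """Average the (frozen) embeddings of the few labeled examples of each class."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for vector, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(vector)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], vector)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids: Dict[str, List[float]], vector: List[float]) -> str:
    """Assign the class whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], vector))


if __name__ == "__main__":
    # Hypothetical embeddings from a self-supervised network: two labeled examples per class.
    few_shot_features = [[0.0, 0.0], [0.0, 1.0], [10.0, 10.0], [10.0, 11.0]]
    few_shot_labels = ["background", "background", "pedestrian", "pedestrian"]
    centroids = fit_centroids(few_shot_features, few_shot_labels)
    print(predict(centroids, [9.0, 9.5]))  # prints pedestrian
```

The heavy lifting is done by the self-supervised embeddings; the supervised part only needs enough labels to place one point per class.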

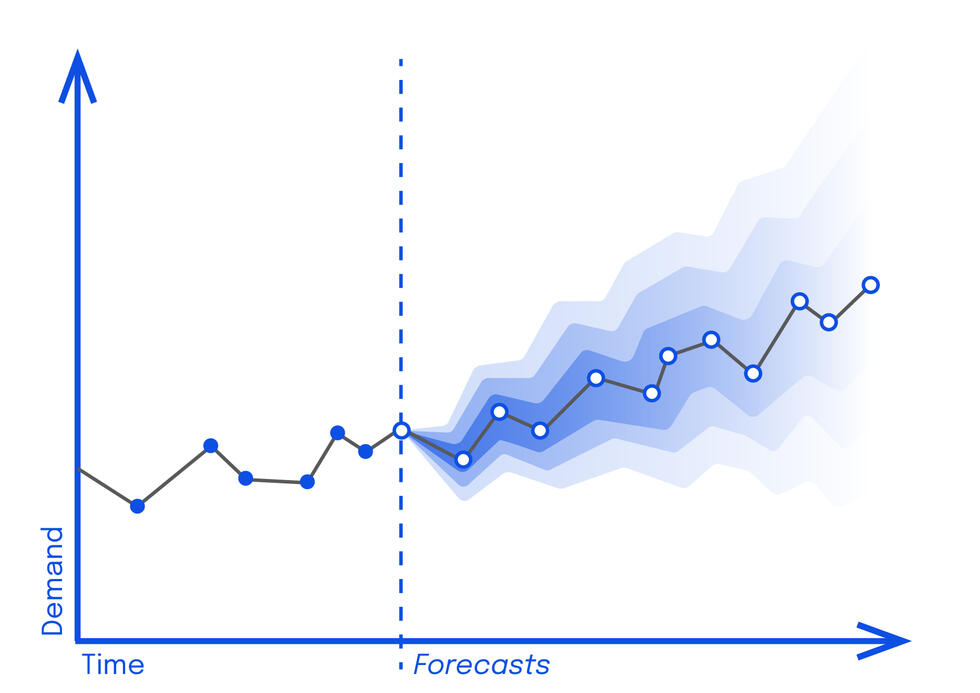

While these new methods are being prepared for industrial use, research has already begun on further developments of the Self-Supervised Learning concept (e.g., Energy-based Self-Supervised Learning) that aim to represent the world in even richer ways. This research seems to point toward modeling images probabilistically, that is, taking into account the variety and unpredictability of the world we live in. Although we are still a long way from these results, it is interesting to consider the possibilities opened up by a technology that can learn autonomously and then draw on much more knowledge, not only of an object but also of its context and potential future outcomes, to make decisions.
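In an energy-based formulation, the model learns an energy function E(x, y) that is low when a possible outcome y is compatible with an observation x and high otherwise; a latent variable can represent several different futures that are all plausible, and energies can be turned into probabilities with a Gibbs distribution. The toy example below assumes a one-dimensional “pedestrian position”, a hand-written energy instead of a learned one, and a hypothetical latent with three directions (left, stay, right). It only illustrates the idea and is not the method of any specific research group.

```python
import math
from typing import Dict, List

# Hypothetical latent variable: the pedestrian may step left, stand still, or step right.
LATENT_DIRECTIONS = (-1.0, 0.0, 1.0)


def energy(x: float, y: float, speed: float = 1.0) -> float:
    """Hand-written energy E(x, y): low when y is a plausible next position after x.

    Minimizing over the latent picks whichever possible future explains y best,
    so several different outcomes can all have low energy.
    """
    return min((x + z * speed - y) ** 2 for z in LATENT_DIRECTIONS)


def gibbs_probabilities(x: float, candidates: List[float], beta: float = 1.0) -> Dict[float, float]:
    """Turn energies into probabilities: p(y | x) is proportional to exp(-E(x, y) / beta)."""
    weights = {y: math.exp(-energy(x, y) / beta) for y in candidates}
    total = sum(weights.values())
    return {y: w / total for y, w in weights.items()}


if __name__ == "__main__":
    probs = gibbs_probabilities(0.0, [-1.0, 0.0, 1.0, 3.0])
    for position, p in probs.items():
        print(f"next position {position:+.1f}: p = {p:.3f}")
```

Left, stay, and right end up equally likely while the implausible jump to 3.0 gets little probability. In a real system E would be a learned network over images or embeddings, and `beta` would control how sharply probability concentrates on low-energy outcomes.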

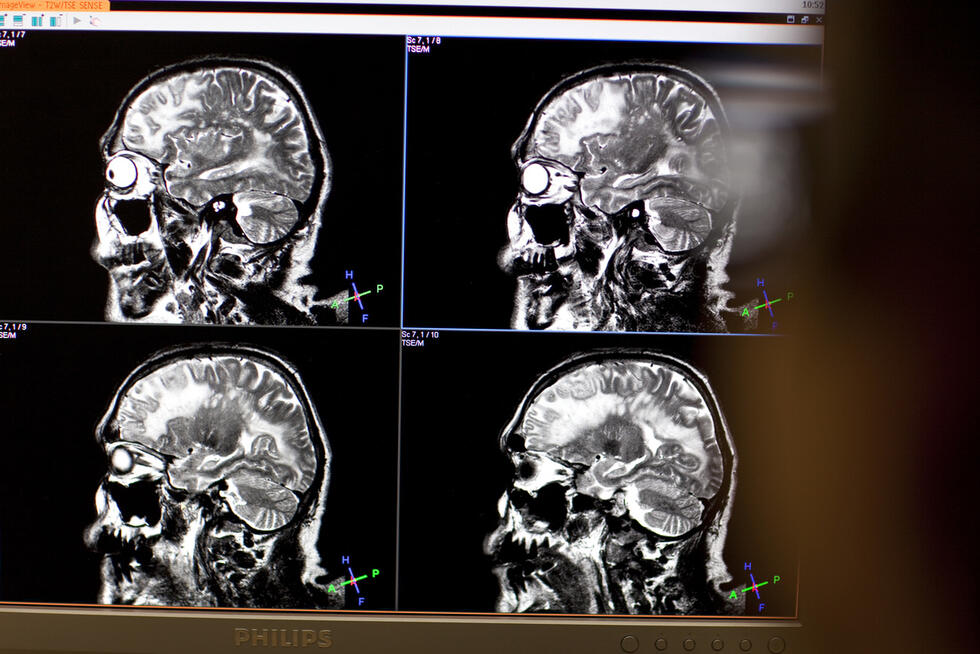

Meanwhile, at Artificialy, we are testing some of these innovative methods and applying them to Computer Vision. In particular, we focus on their ability to train efficient Neural Networks even when data is scarce. We have also applied them in real projects for our clients, for example in a complex Medtech problem and in AI applications for urban environments, where privacy regulations severely limit access to data or the use of images.

Artificialy

Artificialy is a leading-edge center of competence that draws on the best scientific knowledge thanks to its links with renowned AI scientific institutions, and attracts and retains the best talent in Data Science. This leading-edge know-how is paired with the founders' 25 years of experience running projects with major firms and delivering measurable results to companies.

Written by: Damiano Binaghi, Head of Computer Vision at Artificialy SA